Satellite remote sensing

Goal(s)

Main objective

Remote sensing is the term that covers, among other things, the acquisition of satellite images: the technique consists in collecting information about the object to be measured without making physical contact with it, in contrast to on-site observation.

Satellite images are used to monitor different types of infrastructure (for example, bridges) because of two major advantages: the large area covered in a single analysis and the possibility of recovering historical data from archived images.

These advantages make satellite monitoring more accessible than work in which on-site data are difficult and expensive to obtain.

The main objective of the satellites used to monitor infrastructure is the observation and mapping of the Earth's surface.

Description

Remote sensing data can be acquired by satellites in two ways, depending on the signal source used to explore the object:

- Active: the sensor generates its own radiation and receives it after it bounces off the target. Most devices use microwaves because they are relatively immune to weather conditions. Active systems differ in what they transmit (light or microwaves) and what they determine (for example, distance or height).

- Passive: the sensor receives radiation emitted or reflected by the Earth. For reflected radiation, it depends on natural energy (sunlight) bouncing off the target, so it only works under adequate illumination; otherwise there is nothing to reflect. Passive remote sensing uses multispectral or hyperspectral sensors that measure the radiation received in multiple spectral bands. These sensors differ in the number of channels and their wavelengths. The bands cover spectra within and beyond human vision (IR, NIR, TIR, microwave, etc.).

Functioning mode

To capture data, satellites use electromagnetic radiation in the following spectral regions [30]:

1) Photographic ultraviolet: λ between 0.3 and 0.4 µm. This is the only part of the ultraviolet that can be captured with photographic emulsions; the rest is absorbed by the atmosphere and does not reach the Earth's surface.

2) Visible: λ between 0.4 and 0.7 µm. This is the operating range of most image-producing sensors and the best known, since it corresponds to the sensitivity of the human eye, which makes images easier to interpret.

3) Photographic: λ between 0.3 and 0.9 µm. It corresponds to the sensitivity range of the photographic films currently in use and lies within the atmospheric window between 0.3 and 1.35 µm. It is the range used in multispectral photography.

4) Reflective region: λ between 0.3 and 3 µm. It corresponds to the radiation reflected by natural bodies at the ordinary temperatures of the Earth's surface.

5) Emissive region: λ between 3 and 14 µm. In this region, thermal sensors capture the energy emitted by bodies as a function of their temperature. It is also called thermal or emissive IR.

6) Reflective infrared: λ between 0.7 and 3 µm. This is the part of the IR in which reflected radiation is captured; photographic systems and multispectral scanners operate here. Two subregions can be considered:

6.a) Near IR: λ between 0.7 and 1.3 µm. High-sensitivity photographic emulsions operate here, and it lies in an atmospheric window that spans the UV, the visible and the near IR.

6.b) Middle IR: λ between 1.3 and 3 µm. This is the region most affected by atmospheric absorption bands, so sensors must operate in two atmospheric windows, between 1.5 and 1.8 µm and between 2.0 and 2.4 µm.

7) Optical region: λ between 0.3 and 15 µm. It includes the entire application range of optical systems such as lenses, prisms and mirrors. Multispectral scanners can operate throughout this region.

8) Microwave: λ between 0.3 and 300 cm. It is used by side-looking airborne radar (SLAR) and synthetic aperture radar (SAR), both active sensors, and by the microwave radiometer, a passive sensor.
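To make the nested ranges above easier to compare, the sketch below stores them in a Python dictionary and lists every region that contains a given wavelength. It is an illustration only: the dictionary and function names are hypothetical, the near IR is taken as 0.7 to 1.3 µm (the part of the reflective infrared below the middle IR), and the microwave limits are converted from centimetres to micrometres. A helper applies λ = c/f, which relates radar bands quoted as frequencies to this wavelength list.

```python
# Spectral regions from the list above: (min, max) wavelength in micrometres.
# The ranges overlap on purpose, because the list groups the spectrum in nested ways.
REGIONS_UM = {
    "Photographic ultraviolet": (0.3, 0.4),
    "Visible": (0.4, 0.7),
    "Photographic": (0.3, 0.9),
    "Reflective region": (0.3, 3.0),
    "Emissive region": (3.0, 14.0),
    "Reflective infrared": (0.7, 3.0),
    "Near IR": (0.7, 1.3),
    "Middle IR": (1.3, 3.0),
    "Optical region": (0.3, 15.0),
    "Microwave": (0.3e4, 300e4),  # 0.3 cm to 300 cm, converted to micrometres
}

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def regions_for(wavelength_um: float) -> list:
    """Names of all listed regions whose range contains the wavelength (bounds inclusive)."""
    return [name for name, (low, high) in REGIONS_UM.items() if low <= wavelength_um <= high]


def frequency_to_wavelength_cm(frequency_ghz: float) -> float:
    """Wavelength in centimetres from a frequency in GHz, using lambda = c / f."""
    return SPEED_OF_LIGHT / (frequency_ghz * 1e9) * 100.0


print(regions_for(0.55))
print(round(frequency_to_wavelength_cm(5.405), 2))
```

For example, a wavelength of 0.55 µm falls in the visible, photographic, reflective and optical regions at once, and the 5.405 GHz frequency listed for Sentinel-1 in Table 9 corresponds to a wavelength of about 5.5 cm.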

Passive sensors include photographic sensors; optical-electronic sensors, which combine optics similar to those of a photographic camera with an electronic detection system (push broom and whisk broom sensors); imaging spectrometers; and antenna sensors (microwave radiometers). Active sensors include LIDAR and RADAR [31].

There are two types of optical-electronic systems [31]:

- Whisk broom scanners are the most common in remote sensing. A movable mirror oscillates perpendicular to the direction of travel, scanning strips of land on both sides of the track. Each movement of the mirror sends information from a different strip to the set of detectors.

- Push broom scanners eliminate the oscillating mirror by using a linear array of many detectors that covers the entire field of view of the sensor. This increases spatial resolution and reduces geometric errors, because the moving and less robust part of the whisk broom is removed. However, calibration is more complex, since it must be done for all detectors together to achieve homogeneous behaviour.
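The calibration problem of push broom sensors can be illustrated with a simple relative calibration. The sketch below assumes that each image column comes from one detector of the array and applies moment matching (rescaling every column to the mean and standard deviation of the whole image), a common destriping technique that is not necessarily the one used by any particular mission. The synthetic scene and the detector gains and offsets are made up for the example.

```python
import numpy as np


def moment_match_columns(image: np.ndarray) -> np.ndarray:
    """Relative calibration of a push broom image by moment matching.

    Each column is assumed to come from one detector of the linear array.
    Every column is rescaled to the mean and standard deviation of the whole
    image, which removes detector-to-detector gain and offset differences
    as long as all detectors see statistically similar scenes.
    """
    col_mean = image.mean(axis=0)
    col_std = image.std(axis=0)
    col_std[col_std == 0] = 1.0  # avoid division by zero for perfectly flat columns
    return (image - col_mean) / col_std * image.std() + image.mean()


# Synthetic along-track gradient seen by 5 detectors (columns) with
# hypothetical gains and offsets, which produces vertical striping.
rng = np.random.default_rng(0)
scene = np.linspace(50.0, 150.0, 100)[:, None] + rng.normal(0.0, 1.0, (100, 5))
gains = np.array([1.0, 1.1, 0.9, 1.05, 0.95])
offsets = np.array([0.0, 5.0, -3.0, 2.0, -1.0])
striped = scene * gains + offsets
corrected = moment_match_columns(striped)

print("spread of column means before:", round(float(np.ptp(striped.mean(axis=0))), 2))
print("spread of column means after:", round(float(np.ptp(corrected.mean(axis=0))), 6))
```

Moment matching only works when all detectors observe statistically similar scenes, which is why operational calibration typically relies on on-board or external references rather than on image statistics alone.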

In addition to the Push Broom and Whisk Broom, there is the microwave radiometer [31]:

- Microwave radiometers consist of an antenna, which acts as the receiving and amplifying element for the microwave signal (since the signal is very weak), and a detector. In this type of system, the size of the ground resolution cell is inversely proportional to the antenna diameter and directly proportional to the wavelength. As a result, spatial resolution is coarse and these sensors should only be applied in global studies.
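The proportionality stated above can be made concrete with the diffraction approximation, in which the beamwidth is roughly λ/D and the ground footprint is that angle multiplied by the altitude. The function below is a back-of-the-envelope sketch under those assumptions (nadir view, flat Earth, constant factors ignored); the 21 cm wavelength and 700 km altitude are hypothetical values chosen for illustration.

```python
def footprint_km(wavelength_m: float, antenna_diameter_m: float, altitude_km: float) -> float:
    """Approximate ground footprint of a nadir-looking antenna.

    Beamwidth ~ wavelength / diameter (radians); the footprint is that angle
    times the altitude. The constant factor (of order 1, depending on the
    antenna illumination) and Earth curvature are ignored.
    """
    beamwidth_rad = wavelength_m / antenna_diameter_m
    return altitude_km * beamwidth_rad


# Hypothetical radiometer: 21 cm wavelength, 700 km altitude, two antenna sizes.
for diameter_m in (2.0, 6.0):
    print(diameter_m, "m antenna ->", round(footprint_km(0.21, diameter_m, 700.0), 1), "km")
```

Even with a 6 m antenna the footprint is about 24 km at this wavelength, which illustrates why radiometer data suit global rather than local studies.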

Finally, satellites acquire information in two different ways depending on their orbital position [31]:

- Geosynchronous or geostationary: these satellites orbit above the Equator at an altitude of about 36,000 km. They always remain above the same point, following the Earth in its rotation.

- Heliosynchronous (sun-synchronous) satellites move in generally circular, near-polar orbits (the orbital plane is nearly parallel to the Earth's axis of rotation), so that, as the Earth rotates beneath them, they capture images of different points each time they pass through the same point of the orbit. These orbits are typically between 300 and 1500 km high. The orbit is designed so that the satellite always passes over a given latitude at the same local solar time.
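Both orbit types follow from standard orbital mechanics. The sketch below, assuming circular orbits and standard values for the Earth's constants, recovers the geostationary altitude from Kepler's third law (the 36,000 km quoted above is a rounded value) and computes the inclination at which the Earth's oblateness (the J2 term) turns the orbital plane once per year, which is what keeps a heliosynchronous satellite's local time fixed. Higher-order gravity terms are ignored, so the results are approximate.

```python
import math

MU_EARTH = 3.986004418e14       # Earth's gravitational parameter GM, m^3/s^2
EARTH_RADIUS_EQ = 6_378_137.0   # equatorial radius, m
J2 = 1.08263e-3                 # Earth's oblateness coefficient
SIDEREAL_DAY_S = 86_164.0905    # one rotation of the Earth relative to the stars
TROPICAL_YEAR_S = 365.2422 * 86_400


def geostationary_altitude_km() -> float:
    """Kepler's third law for a circular orbit whose period equals one sidereal day."""
    radius = (MU_EARTH * SIDEREAL_DAY_S**2 / (4 * math.pi**2)) ** (1 / 3)
    return (radius - EARTH_RADIUS_EQ) / 1000.0


def sun_synchronous_inclination_deg(altitude_km: float) -> float:
    """Inclination whose J2 nodal precession equals one revolution per year.

    Circular orbit, J2 term only:
    dOmega/dt = -1.5 * J2 * sqrt(mu) * R^2 * a^(-3.5) * cos(i)
    """
    a = EARTH_RADIUS_EQ + altitude_km * 1000.0
    required_rate = 2 * math.pi / TROPICAL_YEAR_S  # rad/s, following the Sun
    cos_i = -required_rate * a**3.5 / (1.5 * J2 * math.sqrt(MU_EARTH) * EARTH_RADIUS_EQ**2)
    return math.degrees(math.acos(cos_i))


print(round(geostationary_altitude_km()))
for h in (300, 693, 1500):
    print(h, "km ->", round(sun_synchronous_inclination_deg(h), 2), "deg")
```

For the 693 km altitude listed for Sentinel-1 in Table 9, the function returns an inclination close to the 98.18° given there; the value is slightly above 90°, which is why these orbits are described as near-polar.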

In addition, depending on the orientation with which the sensor captures images, a distinction is made between sensors with [31]:

- Vertical orientation, typical of satellites with low or medium spatial resolution.

- Oblique orientation, typical of radar.

- Steerable orientation, found on high-resolution sensors. It allows high spatial resolution to be combined with high temporal resolution. Images of the entire Earth's surface are no longer taken systematically; instead, the sensor is pointed on request. The drawback is that it is difficult to find archive images afterwards, since only previously ordered images are acquired.

Types

Remote sensing systems use different regions of the electromagnetic spectrum. According to the type of energy they capture, sensors can be classified as photographic, optical or microwave sensors.

Photographic systems are those that capture images with cameras using photographic emulsions sensitive to wavelengths from 0.3 to 0.9 µm (UV to IR). Optical systems are sensors that capture images at wavelengths from 0.3 to 15 µm. Both systems capture the electromagnetic energy reflected or emitted by the ground, and the spectral response is recorded in the image.

Microwave systems operate at wavelengths from about 0.8 cm to 100 cm. The content of each pixel in the images they capture is the amount of the energy emitted by the satellite towards the Earth that returns to it; this quantity is called backscatter. Interpreting it requires a good knowledge of the underlying physics [30].
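Backscatter is usually handled as a dimensionless coefficient (σ0) and reported in decibels. The sketch below shows the conversion in both directions and why averaging is generally done in linear units: the mean of dB values equals the dB value of the geometric mean, which is always lower than that of the arithmetic mean. The pixel values are hypothetical.

```python
import math


def to_db(sigma0_linear: float) -> float:
    """Linear backscatter coefficient to decibels: 10 * log10(sigma0)."""
    return 10.0 * math.log10(sigma0_linear)


def from_db(sigma0_db: float) -> float:
    """Decibels back to linear power units."""
    return 10.0 ** (sigma0_db / 10.0)


# Hypothetical backscatter of three pixels, in linear units.
pixels = [0.02, 0.05, 0.2]
mean_of_db = sum(to_db(p) for p in pixels) / len(pixels)
db_of_mean = to_db(sum(pixels) / len(pixels))
print(round(mean_of_db, 2), round(db_of_mean, 2))
```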

Process/event to be detected or monitored

In optical-electronic systems, the incoming radiation is split by the optical components into several wavelength ranges. Each range is sent to the detectors for that region of the spectrum, which convert it into an electrical signal and finally into a numeric value (digital number). These values can be converted into radiance values using the calibration coefficients. The mission of imaging spectrometers is to acquire images in a large number of spectral bands, in order to obtain an almost continuous spectrum of the radiation.

Radar operates in the band between 1 mm and 1 m. Artificial microwaves sent in a given direction collide with targets and are scattered. The scattered energy is received, amplified and analysed to determine the location and properties of the targets. By measuring the time the radiation pulse takes to travel to the target and back, the distance travelled can be known and a digital terrain model (DTM) generated. Radar can work in any weather condition, which makes it a good option in cloudy areas [31].
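The two measurement chains described above reduce to simple formulas. The sketch assumes a linear calibration of the form radiance = gain × DN + offset (some products publish the inverse form, so the coefficients must be taken from each product's metadata) and computes radar range as the speed of light times half the two-way travel time. The gain, offset and distance are hypothetical example values.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def dn_to_radiance(dn: int, gain: float, offset: float) -> float:
    """Linear calibration of a digital number: radiance = gain * DN + offset.

    The units of the result follow those of the product's calibration coefficients.
    """
    return gain * dn + offset


def range_from_echo_delay(round_trip_s: float) -> float:
    """Distance to the target in metres: the pulse covers it twice (out and back)."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


# Hypothetical calibration coefficients for one band.
print(dn_to_radiance(1200, gain=0.01, offset=-0.1))

# Echo delay for a target 693 km away, then the distance recovered from it.
delay_s = 2 * 693_000 / SPEED_OF_LIGHT
print(round(range_from_echo_delay(delay_s)))
```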

Physical quantity to be measured

The resolution of satellite imagery limits infrastructure monitoring to a general assessment of condition, and detailed structural problems cannot be detected. The objective is instead to detect changes over time, for example movements of the order of mm/year (radar satellites) or visible changes at a resolution of at best about 50 cm (optical satellites).
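Movement in mm/year is typically obtained by fitting a trend to a time series of displacements. The sketch below computes an ordinary least-squares slope in plain Python; the 12-day sampling and the 3 mm/year subsidence are hypothetical, and real series contain noise, seasonal effects and outliers that this minimal version ignores.

```python
def linear_velocity(times_years: list, displacements_mm: list) -> float:
    """Ordinary least-squares slope of displacement against time (mm/year)."""
    n = len(times_years)
    t_mean = sum(times_years) / n
    d_mean = sum(displacements_mm) / n
    covariance = sum((t - t_mean) * (d - d_mean) for t, d in zip(times_years, displacements_mm))
    variance = sum((t - t_mean) ** 2 for t in times_years)
    return covariance / variance


# Hypothetical series: one acquisition every 12 days for about two years,
# with a steady subsidence of 3 mm/year.
times = [k * 12 / 365.25 for k in range(61)]
displacements = [-3.0 * t for t in times]
print(round(linear_velocity(times, displacements), 3))
```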

Induced damage to the structure during the measurement

As the images are taken remotely, this type of technique does not cause any damage during measurement.

General characteristics

Measurement type (static or dynamic, local or global, short-term or continuous, etc.)

The radiation leaving the Earth's surface (emitted or reflected) is a continuous phenomenon in four dimensions (space, time, wavelength and radiance).

Measurement range

Because measurements differ greatly between satellites, the measurement range for each type of data is wide. Section 1.8 specifies the measurement resolutions of the different satellites and therefore the existing measurement ranges.

Measurement accuracy

The following four types of resolution used in remote sensing [30],[31] define the measurement accuracy:

- Spatial resolution (pixel size): for photographic sensors, it depends on the photographic scale and the flight height. For optical-electronic sensors, it also depends on the flight height of the platform, the scanning (read-out) speed and the number of detectors. For antenna sensors such as radar, it depends on the aperture radius of the antenna, the flight height and the operating wavelength.

- Spectral resolution (the number and width of the spectral regions in which the sensor collects data): among space sensors, the lowest spectral resolution corresponds to photographic systems operating in the visible and to radar operating in the microwave region.

- Radiometric resolution (the number of intensity levels that can be distinguished): in photographic systems, it is given by the number of grey levels captured by the film. In electro-optical sensors, it is given by the number of digital levels produced by the sensor's analog-to-digital conversion.

- Temporal resolution: the time that elapses between successive images of the same area.
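Two of these resolutions can be estimated with simple relations. For an optical-electronic sensor looking straight down, similar triangles give the ground pixel size as altitude × detector pitch / focal length, and a sensor with n bits distinguishes 2^n digital levels (for example, the 12 bit listed for Sentinel-2 in Table 9 gives 4096 levels). The optical parameters below are hypothetical.

```python
def ground_sample_distance_m(altitude_m: float, detector_pitch_m: float, focal_length_m: float) -> float:
    """Ground pixel size of a nadir-looking sensor from similar triangles:
    GSD / altitude = detector pitch / focal length."""
    return altitude_m * detector_pitch_m / focal_length_m


def grey_levels(bits: int) -> int:
    """Number of digital levels an n-bit analog-to-digital converter can produce."""
    return 2 ** bits


# Hypothetical optics: 7.5 micrometre detectors, 0.6 m focal length, 700 km altitude.
print(round(ground_sample_distance_m(700e3, 7.5e-6, 0.6), 2))
print(grey_levels(8), grey_levels(12))
```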

Background

Today a large amount of data is easy to access, but in the early days satellite information was inaccessible or too expensive. The field has therefore evolved from a scarcity of data (when, for example, users might have only a single image available per year) to an abundance of available data.

The first satellite providing images for Earth observation was launched by the United States in 1972 under the Landsat program.

Europe, through the European Union and the European Space Agency (ESA), launched Sentinel-1A, the first satellite of the Sentinel constellation, in 2014 as part of its Copernicus Earth observation program. Copernicus continuously provides free images and is one of the most important data sources available to users for their analyses.

In addition, other international agencies have launched their own satellites, enriching the availability of information on the earth.

The rapid development of electronics, with ever smaller and cheaper but more powerful computing devices, now allows private companies to launch small satellites and nanosatellites. Added to the images already available, these represent an exponential increase in the availability of Earth observation images, so the current challenge is knowing how to use all this information effectively and usefully [32].

Performance

General points of attention and requirements

Design criteria and requirements for the design of the survey

Does not apply.

Procedures for defining layout of the survey

Does not apply.

Before obtaining the data or satellite images, the objective of the study must be analysed, since the quantity to be measured determines which type of satellite is most suitable.

It is therefore important to be clear about the resolutions offered by the different satellites on the market, as well as the type of data acquisition (optical, radar, etc.).

Once this first decision has been made, it must be borne in mind that weather conditions affect data acquisition. The acquisition dates of the images to be used must therefore be reviewed or filtered.
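Filtering by weather is often done on the cloud-cover estimate distributed with each optical scene. The sketch below keeps scenes inside a study period whose cloud cover is below a threshold. The Scene record, its field names and the catalogue entries are hypothetical; real catalogues expose this metadata under their own names. Radar images generally do not need this step.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Scene:
    """Hypothetical catalogue record for one optical image."""
    scene_id: str
    acquired: date
    cloud_cover_pct: float


def usable_scenes(scenes: list, max_cloud_pct: float, start: date, end: date) -> list:
    """Scenes within [start, end] whose cloud cover does not exceed the threshold, oldest first."""
    selected = [
        s for s in scenes
        if start <= s.acquired <= end and s.cloud_cover_pct <= max_cloud_pct
    ]
    return sorted(selected, key=lambda s: s.acquired)


catalogue = [
    Scene("A", date(2021, 3, 2), 85.0),
    Scene("B", date(2021, 3, 7), 4.5),
    Scene("C", date(2021, 3, 12), 18.0),
    Scene("D", date(2021, 4, 1), 0.0),
]
for scene in usable_scenes(catalogue, 20.0, date(2021, 3, 1), date(2021, 3, 31)):
    print(scene.scene_id, scene.acquired, scene.cloud_cover_pct)
```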

Finally, it is necessary to know the different data sources, public or private, from which the images can be downloaded.

Sensitivity of measurements to environmental conditions

- Atmospheric conditions: changes in the atmosphere, sun illumination and viewing geometry during image capture can affect data accuracy and cause distortions that hinder automated information extraction and change detection. Humidity, water vapor and light are common causes of errors and distortion.

- Altitude and reflectance: light collected by a sensor at high altitude passes through a longer column of air before reaching it. Scattering and absorption along this path alter the measured reflectance, which can diminish color quality and detail in images.
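One simple, widely used way to reduce the additive haze described above is dark object subtraction: the darkest pixels in a band (deep water, shadows) are assumed to have near-zero reflectance, so their value is treated as atmospheric contribution and subtracted from the whole band. This section does not prescribe the method; it is shown here as a minimal sketch, with hypothetical values and a percentile parameter chosen for illustration.

```python
import numpy as np


def dark_object_subtraction(band: np.ndarray, percentile: float = 0.1) -> np.ndarray:
    """Haze correction for one band by dark object subtraction.

    The value at a low percentile of the band is taken as the signal of
    the darkest objects, assumed to come from atmospheric scattering only,
    and is subtracted from every pixel. Negative results are clipped to 0.
    """
    dark_value = np.percentile(band, percentile)
    return np.clip(band - dark_value, 0.0, None)


# Hypothetical band: surface signal plus a uniform haze offset of 12 units.
surface = np.array([[0.0, 40.0, 80.0], [120.0, 0.0, 60.0]])
observed = surface + 12.0
print(dark_object_subtraction(observed, percentile=0.0))
```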

Preparation

Procedures for calibration, initialization, and post-installation verification

Does not apply.

Procedures for estimating the component of measurement uncertainty resulting from calibration of the data acquisition system (calibration uncertainty)

Does not apply.

Requirements for data acquisition depending on measured physical quantity (e.g. based on the variation rate)

- Study of the environment in which the object / infrastructure is placed.

- Choice of the type of satellite image appropriate for the case study.

- Study of the number of images required for analysis.

- Analysis of the weather conditions in the study area, discarding images acquired on days with unfavourable conditions.

- Choice of the download platform and of the software used to process the images.

Performance

Requirements and recommendations for maintenance during operation (in case of continuous maintenance)

Since users of satellite data cannot carry out maintenance of the satellites or the image data sources, the best recommendation is to continuously verify the availability of the product to be used, while always considering alternative images available on the market.

Criteria for the successive surveying campaigns for updating the sensors

The campaigns include: (i) a georeferenced frame, i.e. the global location of the bridge; (ii) alignment of sensor data, i.e. the relative alignment of the data collected in a survey; (iii) multi-temporal registration to previous campaigns; and (iv) diagnostics.
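Multi-temporal registration (step iii) can be illustrated with phase correlation, a standard technique for finding the translation between two images of the same area. The sketch below recovers integer-pixel shifts only, on a synthetic image; operational co-registration also handles sub-pixel offsets, rotation and terrain-induced distortions, which are outside this example.

```python
import numpy as np


def estimate_shift(reference: np.ndarray, moving: np.ndarray) -> tuple:
    """Integer-pixel (row, col) shift of `moving` relative to `reference` by phase correlation."""
    cross_power = np.fft.fft2(moving) * np.conj(np.fft.fft2(reference))
    cross_power /= np.abs(cross_power) + 1e-12  # keep only the phase information
    correlation = np.abs(np.fft.ifft2(cross_power))
    peak = np.unravel_index(np.argmax(correlation), correlation.shape)
    # Peak indices past half the image size correspond to negative shifts (FFT wrap-around).
    return tuple(int(p - n) if p > n // 2 else int(p) for p, n in zip(peak, correlation.shape))


rng = np.random.default_rng(1)
earlier = rng.random((64, 64))
later = np.roll(earlier, shift=(3, -5), axis=(0, 1))  # simulated misregistration
print(estimate_shift(earlier, later))
```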

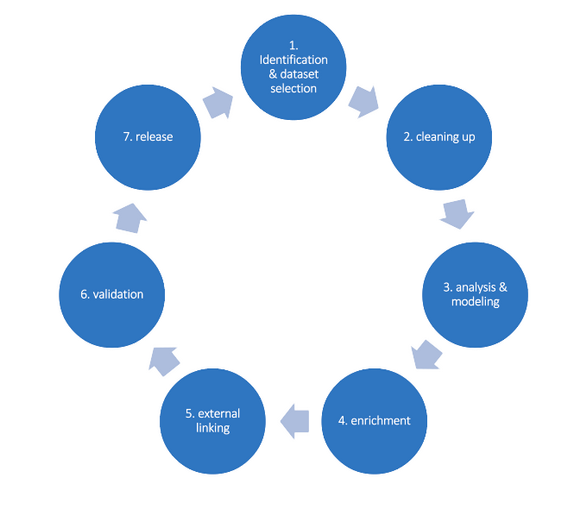

Because of the great diversity of data sources and data types, it is recommended to define an ontology in a "standardized" way, as shown in Figure 35 [33].

Reporting

Does not apply.

Lifespan of the technology (if applied for continuous monitoring)

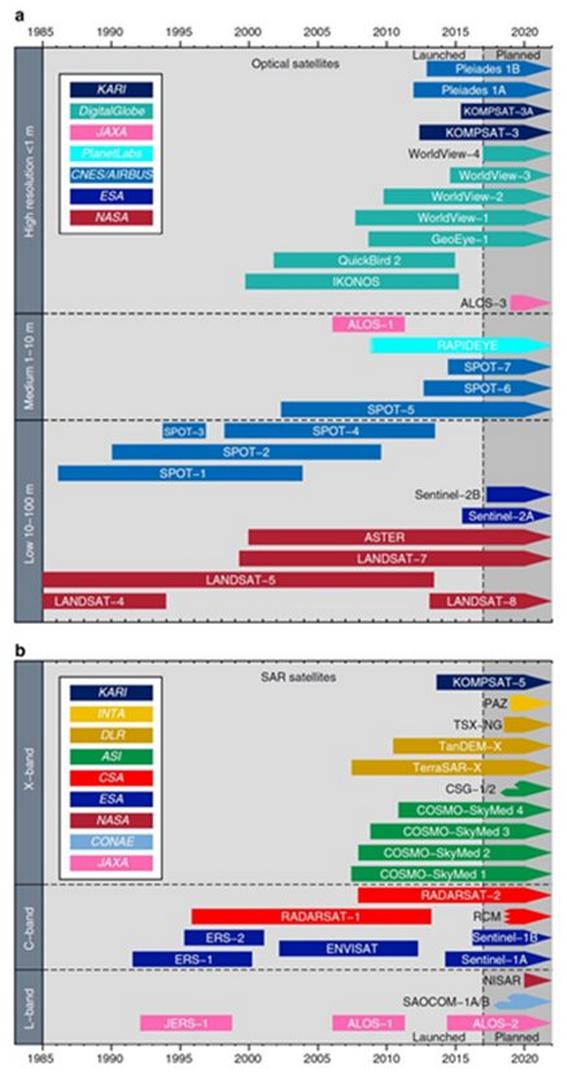

It is necessary to know the useful life of the different satellites on the market (Figure 36).

Many satellites operate beyond their planned useful life, but an analysis may also start with images from one satellite and then be impossible to continue because no further images are available. In such cases, a good alternative is to combine images from different satellites.

Interpretation and validation of results

Expected output (Format, e.g. numbers in a .txt file)

Because of the diversity of satellites and of their uses and technologies, there is a great variety of output file formats, which are very difficult to unify. However, several commonly used formats can be considered standard [35],[36]:

HDF5. HDF5 addresses some of the limitations and deficiencies of older versions of HDF in order to meet the requirements of current and anticipated computing systems and applications. Its improvements include larger file sizes, more objects, multi-threaded and parallel I/O, and unified, flexible data models and interfaces. Although it inherits the old version numbering, HDF5 is a new data format and is not backward compatible with older versions of HDF. HDF5 consists of a software package for manipulating HDF5 files, a file format specification describing the low-level objects in a physical disk file, and a user's guide describing the high-level objects exposed by the HDF5 APIs.

The Physical Layout of HDF5. At the lowest level, an HDF5 file consists of the following components: a superblock, B-tree nodes, object headers, a global heap, local heaps and free space. The HDF5 physical file format is specified at three levels of information. Level 0 is file identification and definition information. Level 1 provides information about the infrastructure of an HDF5 file. Level 2 contains the actual data objects and the metadata about them (NCSA, 2003c).
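The Level 0 file identification can be illustrated with the HDF5 format signature, the eight bytes \x89HDF\r\n\x1a\n that start the superblock. The HDF5 specification allows an optional user block before the superblock, so the signature is searched at offset 0 and then at 512, 1024, 2048 and so on. The sketch below implements that search with the Python standard library only; in practice a dedicated HDF5 library would be used to read the file contents.

```python
import io
from typing import BinaryIO, Optional

HDF5_SIGNATURE = b"\x89HDF\r\n\x1a\n"


def find_hdf5_superblock(stream: BinaryIO) -> Optional[int]:
    """Byte offset of the HDF5 superblock, or None if the signature is not found.

    Candidate offsets are 0, 512, 1024, 2048, ... (each doubling the previous
    one after 512), which leaves room for an optional user block.
    """
    stream.seek(0, io.SEEK_END)
    size = stream.tell()
    offset = 0
    while offset + len(HDF5_SIGNATURE) <= size:
        stream.seek(offset)
        if stream.read(len(HDF5_SIGNATURE)) == HDF5_SIGNATURE:
            return offset
        offset = 512 if offset == 0 else offset * 2
    return None


plain = io.BytesIO(HDF5_SIGNATURE + bytes(100))
with_user_block = io.BytesIO(bytes(512) + HDF5_SIGNATURE + bytes(100))
print(find_hdf5_superblock(plain), find_hdf5_superblock(with_user_block))
```

A file on disk can be checked the same way by passing the object returned by open(path, "rb"), where path is any HDF5 file of interest.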

The National Imagery Transmission Format (NITF) was designed primarily by the National Geospatial-Intelligence Agency (NGA), formerly the National Imagery and Mapping Agency (NIMA), and is a component of the National Imagery Transmission Format Standard (NITFS). It has been adopted by ISO as an international standard known as the Basic Image Interchange Format (BIIF) (ISO/IEC 12087-5). NITF is primarily intended to be a comprehensive format for sharing various kinds of imagery and associated data, including images, graphics, texts, geo- and non-geo-coordinate systems and metadata, among diverse computing systems and user groups. The format is comprehensive in content, implementable on different computer systems, extensible to additional data types, simple to pre- and post-process, and minimal in formatting overhead.

The Physical Layout of NITF. The top-level NITF file structure includes a file header and one or more data segments, which can be image, graphics, text, data extension or reserved extension segments.

TIFF and GeoTIFF. The Tagged Image File Format (TIFF) is designed for raster image data. It is primarily used to describe unsigned-integer bi-level, grey-scale, palette pseudo-color and three-band full-color image data, but it can also store other types of raster data. Although TIFF is not considered a geospatial data format, its extension GeoTIFF, which includes a standardized definition of geolocation information, is one of the most popular formats for Earth observation remote sensing data.

The TIFF physical layout includes four components: (1) an 8-byte TIFF header containing the byte order, the TIFF file identifier (the number 42) and the offset (in bytes) of the first Image File Directory (IFD) in the file; (2) one or more IFDs, each containing the number of directory entries, a sequence of 12-byte directory entries and the offset of the next IFD; (3) the directory entries, each with a tag number indicating the meaning of the tag, a data type identifier, a count of the values included in the tag, and an offset containing the file address of the value or value array; and (4) the actual data of each tag. Because the offset field is 4 bytes long, the value of a tag is stored directly in the offset field if and only if it fits into 4 bytes.
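The layout above can be read directly with Python's struct module. The sketch parses the 8-byte header and a 12-byte directory entry from bytes built by hand, and checks whether a tag's values fit in the 4-byte field. Only the baseline field types BYTE, ASCII, SHORT, LONG and RATIONAL are covered, and real files would normally be read with a GeoTIFF-aware library rather than by hand.

```python
import struct

# Byte size of one value for the baseline TIFF field types.
TYPE_SIZES = {1: 1, 2: 1, 3: 2, 4: 4, 5: 8}  # BYTE, ASCII, SHORT, LONG, RATIONAL


def parse_tiff_header(data: bytes) -> tuple:
    """Return (struct byte-order prefix, offset of the first IFD) from the 8-byte header."""
    if data[:2] == b"II":
        endian = "<"  # little-endian
    elif data[:2] == b"MM":
        endian = ">"  # big-endian
    else:
        raise ValueError("not a TIFF file")
    magic, first_ifd = struct.unpack(endian + "HI", data[2:8])
    if magic != 42:
        raise ValueError("TIFF identifier 42 not found")
    return endian, first_ifd


def parse_ifd_entry(entry: bytes, endian: str) -> tuple:
    """Split a 12-byte directory entry into tag, field type, count and the raw 4-byte value/offset field."""
    return struct.unpack(endian + "HHI4s", entry)


def value_is_inline(field_type: int, count: int) -> bool:
    """True when all values fit in 4 bytes and are therefore stored in the entry itself."""
    return TYPE_SIZES[field_type] * count <= 4


# Hand-built little-endian example: header pointing to an IFD at byte 8, and one
# entry for tag 256 (ImageWidth), type SHORT, one value of 512, left-justified
# in the 4-byte field and padded with two zero bytes.
header = b"II" + struct.pack("<HI", 42, 8)
entry = struct.pack("<HHIH2x", 256, 3, 1, 512)

endian, first_ifd = parse_tiff_header(header)
tag, field_type, count, raw = parse_ifd_entry(entry, endian)
if value_is_inline(field_type, count):
    width = struct.unpack(endian + "H", raw[:2])[0]
    print(tag, width)
```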

Interpretation (e.g. each number of the file symbolizes the acceleration of a degree of freedom in the bridge)

The type of satellite must be chosen according to the analysis to be made of the infrastructure, since the information in each pixel differs between data types: for example, the pixels of an optical image contain different information from those of a radar image of the same study area or target.

In Europe, the Copernicus program has a constellation of satellites called Sentinel (Table 9). For infrastructure monitoring with Copernicus, usually only Sentinel-1 (radar images) or Sentinel-2 (optical images) are used.

| Characteristic | Sentinel-1 | Sentinel-2 |
|---|---|---|
| Launch A-unit/B-unit | 2013 / 18 months after A-unit | 2013 / 18 months after A-unit |
| Design lifetime per unit | 7.25 yrs (consumables for 12 yrs) | 7.25 yrs (consumables for 12 yrs) |
| Orbit | Sun-synchronous, 693 km, incl. 98.18°, LTAN 18:00 | Sun-synchronous, 786 km, LTDN 10:30 |
| Instrument | C-band SAR | MSI (multispectral instrument) |
| Coverage | Global, 20 min per orbit | All land surfaces and coastal waters + full Mediterranean Sea between −56° and +84° latitude, 40 min imaging per orbit |
| Revisit | 12 days (6 days with A- and B-units) | 10 days (5 days with A- and B-units) |
| Spatial resolution/swath width | Strip mode: 5 × 5 m / 80 km; interferometric wide-swath mode (standard mode): 5 × 20 m / 250 km; extra-wide-swath mode: 20 × 40 m / 400 km; wave mode: 5 × 5 m / 20 × 20 km | 10, 20 or 60 m depending on spectral band / 290 km |
| Spectral coverage/resolution | 5.405 GHz; VV + VH, HH + HV | 13 spectral bands: 443 to 2190 nm (incl. 3 bands at 60 m for atmospheric correction) |
| Radiometric resolution/accuracy | 1 dB (3σ) | 12 bit / < 5% |

Because of these characteristics, Sentinel-2 is usually used only to visually detect the effects of a collapse, whereas Sentinel-1 is used to monitor the displacement of the infrastructure surface continuously.
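Movement monitoring with Sentinel-1 typically relies on differential SAR interferometry (InSAR), a technique not described in this section: the phase difference between two acquisitions is converted into displacement along the radar line of sight. Because the signal travels to the ground and back, a displacement d changes the phase by 4πd/λ. The sketch below assumes an already unwrapped phase and uses the 5.405 GHz frequency from Table 9; sign conventions differ between processing chains.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s
SENTINEL1_FREQ_HZ = 5.405e9     # C-band centre frequency listed in Table 9


def los_displacement_mm(phase_change_rad: float, frequency_hz: float = SENTINEL1_FREQ_HZ) -> float:
    """Line-of-sight displacement from an unwrapped interferometric phase change.

    Two-way path: phase = 4 * pi * d / wavelength, so d = phase * wavelength / (4 * pi).
    Here a positive phase change is taken as motion away from the satellite;
    real processors may use the opposite sign.
    """
    wavelength_m = SPEED_OF_LIGHT / frequency_hz
    return phase_change_rad * wavelength_m / (4 * math.pi) * 1000.0


print(round(los_displacement_mm(2 * math.pi), 2))  # one full phase cycle
```

One full phase cycle (2π) corresponds to about 2.8 cm of line-of-sight motion at this frequency, so millimetre-level movement appears as a fraction of a cycle.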

Validation

Specific methods used for validation of results depending on the technique

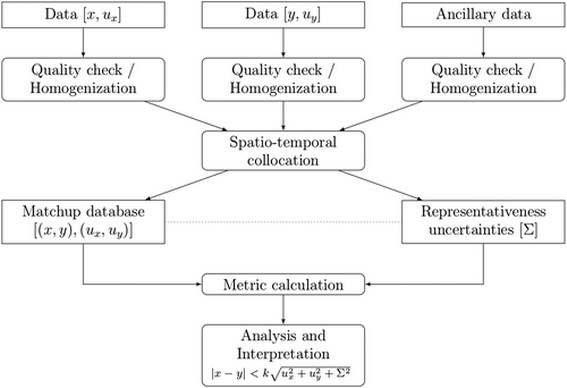

Different technical approaches have been developed in the Earth Observation (EO) communities to address the validation problem, resulting in a wide variety of methods and terminology. Figure 37 shows the generic structure of the comparison part of a validation process [38].

Quantification of the error

It is important to clarify what exactly is meant by the terms “error” and “measurement uncertainty”, which are often used interchangeably within the scientific community. The VIM (International Vocabulary of Metrology) defines measurement uncertainty as a non-negative parameter describing the dispersion of the quantity values attributed to a measurand. Measurement error, on the other hand, is the difference between the measured value and the true value, i.e. a single draw from the probability density function (PDF) determined by the measurement uncertainty. Measurement error can contain both a random and a systematic component: the former averages out over multiple measurements, while the latter does not. Uncertainties in the reference and EO measurements are derived from a consideration of the calibration chain in each system and the statistical properties of the outputs of the measurement system [38].
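The distinction between systematic and random components can be shown on a set of EO-minus-reference differences. The sketch below computes the mean difference (bias, the systematic part), the standard deviation around it (the random part) and the root-mean-square error; with population statistics these satisfy RMSE² = bias² + σ². The check-point values are hypothetical, and the sketch does not propagate the uncertainty of the reference measurements themselves.

```python
import math


def error_components(eo_values: list, reference_values: list) -> dict:
    """Split EO-minus-reference differences into systematic and random parts."""
    errors = [e - r for e, r in zip(eo_values, reference_values)]
    n = len(errors)
    bias = sum(errors) / n  # systematic component: does not average out
    std = math.sqrt(sum((x - bias) ** 2 for x in errors) / n)  # random component
    rmse = math.sqrt(sum(x ** 2 for x in errors) / n)
    return {"bias": bias, "std": std, "rmse": rmse}


# Hypothetical satellite-derived values and reference values at four check points.
stats = error_components([10.4, 9.9, 10.8, 10.5], [10.0, 10.0, 10.0, 10.0])
print({name: round(value, 3) for name, value in stats.items()})
# With population statistics, rmse**2 equals bias**2 + std**2.
```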

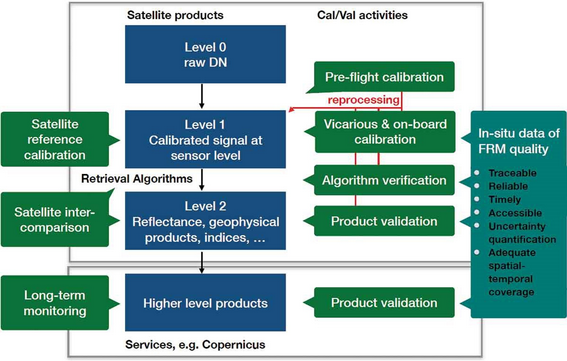

Quantitative or qualitative evaluation

The calibration and validation (CAL-VAL) process (Figure 38) starts before the launch of the platform, because this is the only opportunity to calibrate and physically characterize the satellite directly. After launch, the process continues in order to obtain reliable, calibrated Level 1 and Level 2 data. The CAL-VAL of a mission includes sensor calibration, algorithm verification, geophysical data validation and intercomparison with other missions, all of which feed into the quantification of uncertainties. The process is strengthened by comparing multiple independent sources, which builds confidence in the veracity of the data [39].

Detection accuracy

In addition to the factors mentioned in Section 1.4.1.4 (atmospheric conditions, and altitude and reflectance), other factors can lead to incorrect satellite images [40]:

- Lack of documented metadata for cross-referencing.

- False accuracy is a problem. Good data practices involve regularly layering and cross-referencing data sets against existing data to pinpoint errors and ensure accuracy.

In addition to these factors, precision is often associated with image resolution, since the more pixels an image has, the more detailed it is. Table 10 lists the resolution and approximate accuracy of several satellites [41].

| Satellite resolution | Approximate satellite accuracy |
|---|---|
| 0.31 m | < 5.0 m |
| 0.41 m | 3.0 m |
| 0.55 m | 23 m |
| 0.82 m | 9 m |
| 1.50 m | 35 m |
| 0.40 m | 7.8 m |
| 0.50 m | 9.5 m |

However, as Table 10 shows, the precision of an image is not directly related to its resolution, and it is specified less often (and less clearly) than resolution [41].

Advantages

The use of satellite images offers several advantages over other technologies:

Wide geographical and temporal coverage of the study area.

Free access to information, depending on the resolution required.

Possibility, depending on the satellite used, of obtaining images under any climatic and geographical conditions, and therefore high accessibility of information.

Easy to complement or combine with other on-site techniques.

Disadvantages

High-resolution images often come at a high price.

Satellite images require large storage capacity, and processing them demands high computational performance.

Need for experts to use and interpret the images.

Depending on the type of satellite image and weather conditions, certain images may not be valid for use.

Possibility of automating the measurements

Periodic, automated data collection is built into the operating concept of all satellites, except for acquisitions made on express request (for example, in natural disasters).

| Satellite | Sensor | Spatial resolution | Temporal resolution | Free or charged |
|---|---|---|---|---|
| Landsat | MSS+TM (Landsat-5), ETM+ (Landsat-7), OLI (Landsat-8) | 30 m | 16 days | Free |
| Terra/Aqua | MODIS | 250–1000 m | 1–2 days | Free |
| HJ-1A/B | CCD1/2 | 30 m | 2–4 days | Free |
| SPOT | HRV (SPOT-1 to SPOT-3), VGT (SPOT-4), HRG/HRS/VGT (SPOT-5) | 1 km | 1 day | Charged |
| Sentinel-2 | MSI | 10–20 m | 5 days | Free |
| Sentinel-1 | SAR | 5–40 m | 12 days | Free |
| COSMO-SkyMed | SAR | 3–15 m | 16 days | Charged |
| TerraSAR-X | SAR | 3–10 m | 11 days | Charged |
| ENVISAT | ASAR | 20–500 m | 35 days | Free |
| RADARSAT-1 | SAR | 10–100 m | 24 days | Charged |
| RADARSAT-2 | SAR | 3–100 m | 24 days | Charged |
| ALOS-2 | PALSAR-2 | 25 m | 14 days | Charged |
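The table above can also be queried programmatically when choosing a satellite for a study. The sketch stores a subset of its rows, using the finest value where the table gives a range, and filters by maximum pixel size, maximum revisit time, cost and sensor type. The Mission record and candidates function are hypothetical helpers, and the values should be checked against current mission documentation before use.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Mission:
    name: str
    sensor_type: str          # "optical" or "SAR"
    best_resolution_m: float  # finest spatial resolution given in the table
    revisit_days: float       # shortest temporal resolution given in the table
    free: bool


# A subset of the rows of the table above.
MISSIONS = [
    Mission("Landsat", "optical", 30, 16, True),
    Mission("Terra/Aqua", "optical", 250, 1, True),
    Mission("Sentinel-2", "optical", 10, 5, True),
    Mission("Sentinel-1", "SAR", 5, 12, True),
    Mission("COSMO-SkyMed", "SAR", 3, 16, False),
    Mission("TerraSAR-X", "SAR", 3, 11, False),
]


def candidates(max_resolution_m: float, max_revisit_days: float,
               free_only: bool = True, sensor_type: Optional[str] = None) -> list:
    """Names of missions that meet the pixel-size, revisit, cost and sensor criteria."""
    return [
        m.name for m in MISSIONS
        if m.best_resolution_m <= max_resolution_m
        and m.revisit_days <= max_revisit_days
        and (m.free or not free_only)
        and (sensor_type is None or m.sensor_type == sensor_type)
    ]


print(candidates(20, 5))
print(candidates(10, 16, free_only=False, sensor_type="SAR"))
```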

To automate image downloads, users have numerous freely accessible tools and code at their disposal (for example, Google Earth Engine, DIAS, USGS Earth Explorer, etc.).

Barriers

Image resolution has a limit, and some infrastructure monitoring tasks require finer detail than this limit allows, which makes satellite imagery unfeasible for them.

Civil use of the images from certain satellites is restricted.

Existing standards

Since the 1990s, many national and international organizations have participated in the development of spatial data and information infrastructures, both to facilitate the sharing of spatial data and information among a broad range of geospatial data producers and consumers and to support geospatial applications in multiple disciplines. Since remote sensing is one of the major methods for acquiring geospatial data, remote sensing standards are always among the core standards of any spatial data infrastructure.

For example:

- The National Spatial Data Infrastructure (NSDI) initiative of the United States uses remote sensing standards for the construction of the NSDI (FGDC 2004).

- Internationally, the intergovernmental Group on Earth Observations (GEO) is leading a worldwide effort to build a Global Earth Observation System of Systems (GEOSS).

According to the principles of information engineering, remote sensing standards can be classified into four general categories based on the subject they address: data, processes, organizations and technology [42].

Applicability

Relevant knowledge fields

The purpose of the analysis is linked to the sensors available on board the satellite. Each satellite carries one or more instruments that provide conventional optical images, radar data, pollutant concentrations, temperatures, etc.

Focusing on infrastructure monitoring, satellites can therefore be applied in the following fields:

- Civil Engineering

- Geosciences

- Civil and environmental protection

- Climate change

- Archaeology

- Urbanism

Performance Indicators

- Cracks

- Obstruction/ impending

- Crushing

- Debonding

- Holes

- Rupture

- Displacement

- Deformation

Type of structure

Bridges, roads, railways, buildings, docks or ports, airports, etc.

Spatial scales addressed (whole structure vs specific asset elements)

Satellite-based infrastructure monitoring is most efficient and advisable for large study areas, since this is where the technology has a real advantage over on-site techniques.

Materials

Environment in general

Available knowledge

Reference projects

SIRMA:

Strengthening infrastructure risk management in the Atlantic Area

Other

https://skygeo.com/ → Radar images for mining, energy, civil engineering, underground gas storage

https://kartenspace.com/ → satellite monitoring for linear infrastructures, agricultural fields, mines, logistics centres, forests, natural disasters, cities, oceans and ports

https://www.orbitaleos.com/ → satellite monitoring for Urban Planning, Deforestation, Infrastructure Monitoring, Gas Leaks, Catastrophe Claims, Power Lines and others.

http://dares.tech/ → Radar images for Mining, Infrastructure, Oil and Gas

https://site.tre-altamira.com/ → Radar images for Mining, Oil and Gas, civil engineering, geohazards

https://satsense.com/ → Radar images for Residential & Commercial Properties, Insurance, Infrastructure and Geotechnical